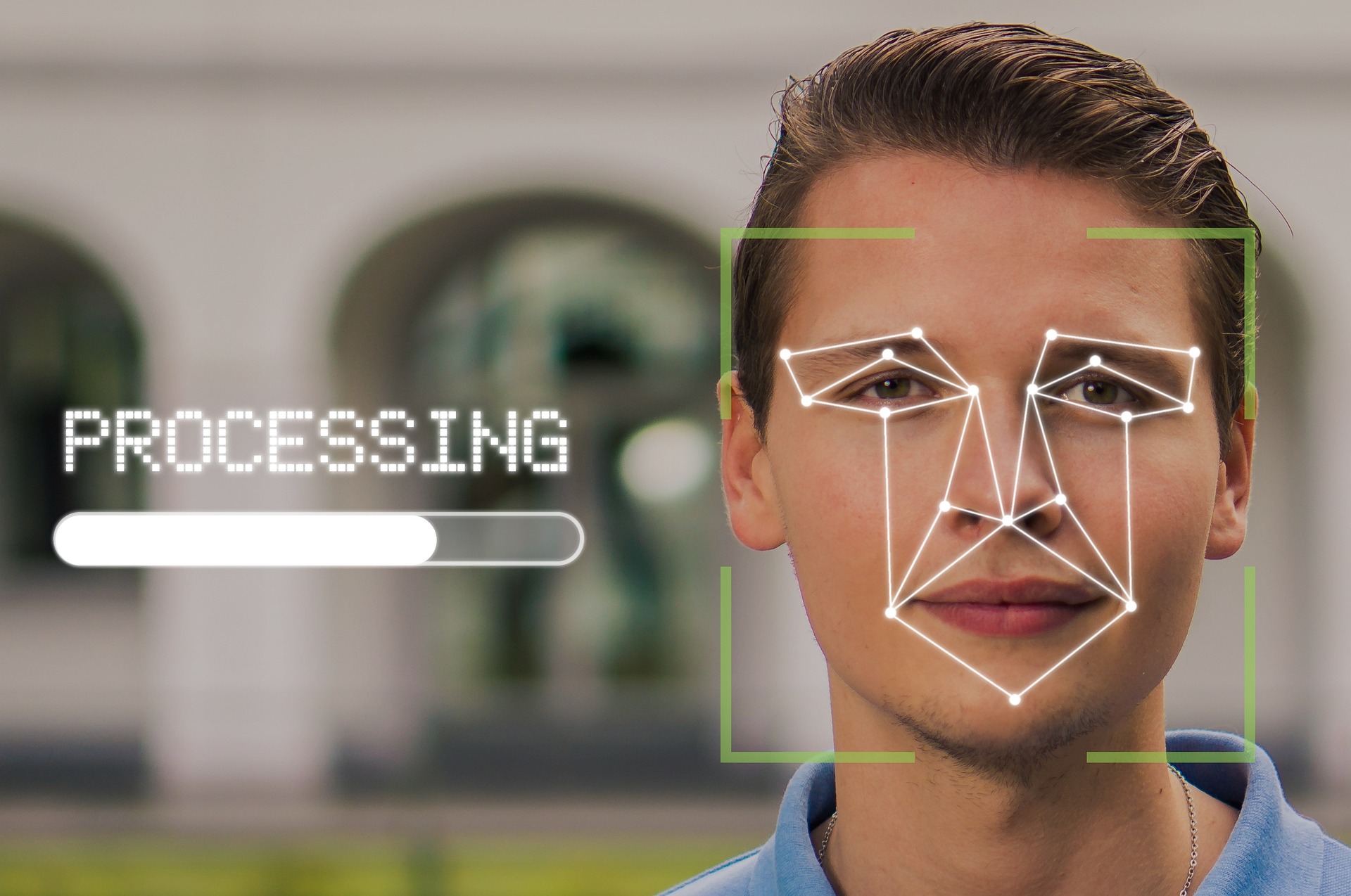

A big biometric security company in the UK, Facewatch, is in hot water after their facial recognition system caused a major snafu - the system wrongly identified a 19-year-old girl as a shoplifter.

Despite concerns about accuracy and potential misuse, facial recognition technology seems poised for a surge in popularity. California-based restaurant CaliExpress by Flippy now allows customers to pay for their meals with a simple scan of their face, showcasing the potential of facial payment technology.

Oh boy, I can’t wait to be charged for someone else’s meal because they look just enough like me to trigger a payment.

I have an identical twin. This stuff is going to cause so many issues even if it worked perfectly.

Sudden resurgence of the movie “Face Off”

Hell yeah brother!

I don’t see this as a negative.

Not uh! All you and your twin have to do is write the word Twin on your forehead every morning. Just make sure to never commit a crime with it written where your twin puts their sign. Or else, you know… You might get away with it.

Nope no obvious problems here at all!

Ok, some context here from someone who built and worked with this kind of tech for a while.

Twins are no issue. I’m not even joking, we tried for multiple months in a live test environment to get the system to trip over itself, but it just wouldn’t. Each twin was detected perfectly every time. In fact, I myself could only tell them apart by their clothes. They had very different styles.

The reality with this tech is that, just like everything else, it can’t be perfect (at least not yet). For all the false detections you hear about, there have been millions upon millions of correct ones.

Twins are no issue. Random ass person however is. Lol

Yes, because like I said, nothing is ever perfect. There can always be a billion little things affecting each and every detection.

A better statement would be “only one false detection out of 10 million”

You want to know a better system?

What if each person had some kind of physical passkey that linked them to their money, and they used that to pay for food?

We could even have a bunch of security put around this passkey that makes it really easy to disable it if it gets lost or stolen.

As for shoplifting, what if we had some kind of societal system that levied punishments against people by providing a place where the victim and accused can show evidence for and against the infraction, and an impartial pool of people decides if they need to be punished or not.

100%

I don’t disagree with a word you said.

FR for a payment system is dumb.

Another way to look at that is ~810 people having an issue with a different 810 people every single day, assuming only one scan per day. That’s roughly 591,000 people having a huge fucking problem at least once every single year.
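A rough sketch of that arithmetic, for anyone who wants to check it. The world population of about 8.1 billion is an assumption (it is what makes 1 in 10 million come out to ~810), as are the one scan per person per day and the idea that each false detection involves two people.

```python
# Back-of-the-envelope check of the false-detection arithmetic.
# Every input here is an assumption for illustration, not measured data.

WORLD_POPULATION = 8_100_000_000      # assumed; this is what yields the ~810 figure
ONE_FALSE_DETECTION_IN = 10_000_000   # "one false detection out of 10 million"
SCANS_PER_PERSON_PER_DAY = 1          # assumed, as in the comment
DAYS_PER_YEAR = 365

# Integer arithmetic avoids floating-point rounding noise.
false_detections_per_day = (
    WORLD_POPULATION * SCANS_PER_PERSON_PER_DAY // ONE_FALSE_DETECTION_IN
)
false_detections_per_year = false_detections_per_day * DAYS_PER_YEAR

# Each false detection involves two people: the one scanned and the one
# they were mistaken for.
people_involved_per_year = 2 * false_detections_per_year

print(false_detections_per_day)   # 810
print(false_detections_per_year)  # 295650
print(people_involved_per_year)   # 591300
```

More scans per person per day would scale all three numbers up proportionally.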

I have this problem with my face in the TSA pre and passport system and every time I fly it gets worse because their confidence it is correct keeps going up and their trust in my actual fucking ID keeps going down

I have this problem with my face in the TSA pre and passport system

Interesting. Can you elaborate on this?

Edit: downvotes for asking an honest question. People are dumb

it can’t be perfect (at least not yet).

Or ever, because it locks you out after a drunken night otherwise.

Or ever because there is no such thing as 100% in reality. You can only add more digits at the end of your accuracy, but it will never reach 100.

In fact, I myself could only tell them apart by their clothes. They had very different styles.

This makes it sound like you only tried one particular set of twins–unless there were multiple sets, and in each set the two had very different styles? I’m no statistician, but a single set doesn’t seem statistically significant.

What I’m saying is we had a deployment in a large facility. It was a partnership with the org that owned the facility to allow us to use their location as a real-world testing area. We’re talking about multiple buildings, multiple locations, and thousands of people (all aware of the system being used).

Two of the employees were twins. It wasn’t planned, but it did give us a chance to see if twins were a weak point.

That’s all I’m saying. It’s mostly anecdotal, as I can’t share details or numbers.

Two of the employees were twins. It wasn’t planned, but it did give us a chance to see if twins were a weak point.

No, it gave you a chance to see if that particular set of twins was a weak point.

With that logic we would need to test the system on every living person to see where it fails.

The system had been tested ad nauseam in a variety of scenarios (including with twins and every other combination you can think of, and many you can’t). In this particular situation, a real-world test in a large facility with many hundreds of cameras everywhere, there happened to be twins.

It’s a strong data point regardless of your opinion. If it was the only one then you’d have a point. But like I said, it was an anecdotal example.

Can you please start linking studies? I think that might actually turn the conversation in your favor. I found a NIST study (pdf link), on page 32, in the discussion portion of 4.2 “False match rates under demographic pairing”:

The results above show that false match rates for imposter pairings in likely real-world scenarios are much higher than those from measured when imposters are paired with zero-effort.

This seems to say that the false match rate gets higher and higher as the subjects are more demographically similar; the highest error rate on the heat map below that is roughly 0.02.

Something else no one here has talked about yet – no one is actively trying to get identified as someone else by facial recognition algorithms yet. This study was done on public mugshots, so no effort to fool the algorithm, and the error rates between similar demographics are atrocious.

And my opinion: Entities using facial recognition are going to choose the lowest bidder for their system unless there’s a higher security need than, say, a grocery store. So, we have to look at the weakest performing algorithms.

My references are the NIST tests.

https://pages.nist.gov/frvt/reports/1N/frvt_1N_report.pdf

That might be the one you’re looking at.

Another thing to remember about the NIST tests is that they try to use a standardized threshold across all vendors. The point is to compare the results in a fair manner across systems.

The system I worked on was tested by NIST with an FMR of 1e-5. But we never used that threshold and always used a threshold that equated to 1e-7, which is orders of magnitude more accurate.
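To put those two thresholds side by side, here is a minimal sketch that treats the false match rate (FMR) as a per-comparison probability and assumes comparisons are independent. The workload of one million comparisons is a made-up number, and the function name is hypothetical.

```python
from fractions import Fraction


def expected_false_matches(comparisons: int, fmr: Fraction) -> Fraction:
    """Expected false matches if each comparison independently errs at rate fmr."""
    return comparisons * fmr


FMR_TESTED = Fraction(1, 10**5)    # 1e-5, the threshold mentioned for the NIST test
FMR_DEPLOYED = Fraction(1, 10**7)  # 1e-7, the threshold mentioned for deployment
COMPARISONS = 1_000_000            # hypothetical workload, for illustration only

at_tested = expected_false_matches(COMPARISONS, FMR_TESTED)
at_deployed = expected_false_matches(COMPARISONS, FMR_DEPLOYED)

print(at_tested)                # 10
print(at_deployed)              # 1/10
print(at_tested / at_deployed)  # 100
```

A stricter threshold generally trades fewer false matches for more missed matches, so this only shows one side of the trade-off.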

And my opinion: Entities using facial recognition are going to choose the lowest bidder for their system unless there’s a higher security need than, say, a grocery store. So, we have to look at the weakest performing algorithms.

This definitely is a massive problem and likely does contribute to poor public perception.

Thanks for the response! It sounds like you had access to a higher quality system than the worst, to be sure. Based on your comments I feel that you’re projecting the confidence in that system onto the broader topic of facial recognition in general; you’re looking at a good example and people here are (perhaps cynically) pointing at the worst ones. Can you offer any perspective from your career experience that might bridge the gap? Why shouldn’t we treat all facial recognition implementations as unacceptable if only the best – and presumably most expensive – ones are?

A rhetorical question aside from that: is determining one’s identity an application where anything below the unachievable success rate of 100% is acceptable?

Based on your comments I feel that you’re projecting the confidence in that system onto the broader topic of facial recognition in general; you’re looking at a good example and people here are (perhaps cynically) pointing at the worst ones. Can you offer any perspective from your career experience that might bridge the gap? Why shouldn’t we treat all facial recognition implementations as unacceptable if only the best – and presumably most expensive – ones are?

It’s a good question, and I don’t have the answer to it. But a good example I like to point at is the ACLU’s announcement of their test on Amazon’s Rekognition system.

They tested the system using the default value of 80% confidence, and their test resulted in 20% false identification. They then boldly claimed that FR systems are all flawed and no one should ever use them.

Amazon even responded saying that the ACLU’s test with the default values was irresponsible, and Amazon’s right. This was before such public backlash against FR, and the reasoning for a default of 80% confidence was the expectation that most people using it would do silly stuff like celebrity lookalikes. That being said, it was stupid to set the default to 80%, but that’s just hindsight speaking.

My point here is that, while FR tech isn’t perfect, the public perception is highly skewed. If there was a daily news report detailing the number of correct matches across all systems, these few showing a false match would seem ridiculous. The overwhelming vast majority of news reports on FR are about failure cases. No wonder most people think the tech is fundamentally broken.

A rhetorical question aside from that: is determining one’s identity an application where anything below the unachievable success rate of 100% is acceptable?

I think most systems in use today are fine in terms of accuracy. The consideration becomes “how is it being used?” That isn’t to say that improvements aren’t welcome, but in some cases it’s like trying to use the hook on the back of a hammer as a screwdriver. I’m sure it can be made to work, but fundamentally it’s the wrong tool for the job.

FR in a payment system is just all wrong. It’s literally forcing the use of a tech where it shouldn’t be used. FR can be used for validation if increased security is needed, like accessing a bank account. But never as the sole means of authentication. You should still require a bank card + pin, then the system can do FR as a kind of 2FA. The trick here would be to first use a good system, and then second, lower the threshold to something that borders on “fairly lenient”. That way you eliminate any false rejections while still maintaining an incredibly high level of security. In that case the chance of your bank card AND pin being stolen by someone who looks so much like you that it tricks FR is effectively nil (but it can never be truly zero). And if that person is being targeted by a threat actor who can coordinate such things, then they’d have the resources to just get around the cyber security of the bank from the comfort of anywhere in the world.
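As a rough sketch of that "card + pin first, FR as a second factor" idea: the function name, the 0-to-1 similarity score, and the 0.6 threshold below are all hypothetical placeholders, not any real bank's or vendor's API.

```python
def authorize_payment(
    card_valid: bool,
    pin_valid: bool,
    face_score: float,
    face_threshold: float = 0.6,  # assumed "fairly lenient" value, purely illustrative
) -> bool:
    """Face match is only ever a second factor, never the sole credential."""
    # Card and PIN are mandatory; a face match alone never authorizes anything.
    if not (card_valid and pin_valid):
        return False
    # The lenient threshold keeps false rejections rare for the real cardholder.
    return face_score >= face_threshold


print(authorize_payment(True, True, face_score=0.75))    # True
print(authorize_payment(False, False, face_score=0.99))  # False
print(authorize_payment(True, True, face_score=0.20))    # False
```

The design choice is that a lookalike without the card and pin gets nothing, however good the face match is.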

Security in every single circumstance is a trade-off with convenience. Always, and in every scenario.

FR works well with existing access control systems. Swipe your badge card, then it scans you to verify you’re the person identified by the badge.

FR also works well in surveillance, with the incredibly important addition of human-in-the-loop. For example, the system I worked on simply reported detections to a SoC (with all the general info about the detection including the live photo and the reference photo). Then the operator would have to look at the details and manually confirm or reject the detection. The system made no decisions, it simply presented the info to an authorized person.
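A minimal sketch of that human-in-the-loop flow, to make the point concrete. The class and function names are hypothetical and this is not the actual system described; it only shows that the software forwards detections and a person makes the decision.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List


class Decision(Enum):
    CONFIRMED = "confirmed"
    REJECTED = "rejected"


@dataclass
class Detection:
    live_photo: str       # identifier of the camera frame (hypothetical)
    reference_photo: str  # identifier of the database photo (hypothetical)
    score: float          # similarity score reported by the matcher


def review(
    detections: List[Detection],
    operator: Callable[[Detection], Decision],
) -> List[Detection]:
    """Return only the detections a human operator explicitly confirmed."""
    # The system makes no decision itself: the score is shown to the
    # operator as information, and nothing is auto-accepted on it.
    return [d for d in detections if operator(d) is Decision.CONFIRMED]


# Stand-in for a human operator; a real one would look at both photos.
def cautious_operator(detection: Detection) -> Decision:
    same_person = detection.live_photo.startswith(detection.reference_photo)
    return Decision.CONFIRMED if same_person else Decision.REJECTED


queue = [
    Detection("alice_cam3", "alice", score=0.97),
    Detection("bob_cam1", "carol", score=0.98),  # high score, wrong person
]
confirmed = review(queue, cautious_operator)
print([d.live_photo for d in confirmed])  # ['alice_cam3']
```

Note that the high-scoring second detection is still rejected, because the score alone never counts as a confirmation.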

This is the key portion that seems to be missing in all news reports about false arrests and whatnot. I’ve looked into all the FR related false arrests and from what I could determine none of those cases were handled properly. The detection results were simply taken as gospel truth and no critical thinking was applied. In some of those cases the detection photo and reference (database) photo looked nothing alike. It’s just the people operating those systems are either idiots or just don’t care. Both of those are policy issues entirely unrelated to the accuracy of the tech.

Super interesting to read your more technical perspective. I also think facial recognition (and honestly most AI use cases) is best when used to supplement an existing system, such as flagging a potential shoplifter to human security.

Sadly most people don’t really understand the tech they use for work. If the computer tells them something they just kind of blindly believe it. Especially in a work environment where they have been trained to do what the machine says.

My guess is that the people were trained on how to use the system at a very basic level. Troubleshooting and understanding the potential for error typically isn’t covered in 30min corporate instructional meetings. They just get a little notice saying a shoplifter is in the store and act on that without thinking.

The mishandling is indeed what I’m concerned about most. I now understand far better where you’re coming from, sincere thanks for taking the time to explain. Cheers

This tech (AI detection) or purpose built facial recognition algorithms?

If it works anything like Apple’s Face ID, twins don’t actually map all that similarly. In the general population, the probability of a matching mapping of the underlying facial structure is approximately 1:1,000,000. It is slightly higher for identical twins and then higher again for prepubescent identical twins.

Meaning, 8’000 potential false positives per user globally. About 300 in US, 80 in Germany, 7 in Switzerland.
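Those per-country figures follow from dividing each population by one million. A quick sketch, where the population numbers are rounded assumptions rather than official statistics and the function name is hypothetical:

```python
ONE_IN = 1_000_000  # the 1:1,000,000 match probability quoted above

# Rounded, assumed populations; swap in whatever figures you trust.
POPULATIONS = {
    "World": 8_000_000_000,
    "US": 335_000_000,
    "Germany": 84_000_000,
    "Switzerland": 8_800_000,
    "Iceland": 380_000,
}


def expected_lookalikes(population: int, one_in: int = ONE_IN) -> float:
    """Expected number of people whose face would match a given user."""
    return population / one_in


for region, population in POPULATIONS.items():
    print(f"{region}: ~{expected_lookalikes(population):g}")
```

With these inputs only Iceland comes out below one expected match per user.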

Might be enough for Iceland.

No, people in Iceland are so genetically homogeneous, they probably match thanks to everyone being so related.

I can already imagine the Tom Clancy thriller where some Joe Nobody gets roped into helping crack a terrorist’s locked phone because his face looks just like the terrorist’s.

Yeah, which is a really good number and allows for near complete elimination of false matches along this vector.

I promise bro it’ll only starve like 400 people please bro I need this

Who’s getting starved because of this technology?

A single mum with no support network who can’t walk into any store without getting physically ejected, maybe?

Let me rephrase it. “Who’s getting suffocated because of gas chambers?”

You’re perfectly OK with 8000 people worldwide being able to charge you for their meals?

No you misunderstood. That is a reduction in commonality by a literal factor of one million. Any secondary verification point is sufficient to reduce the false positive rate to effectively zero.

secondary verification point

Like, running a card sized piece of plastic across a reader?

It’d be nice if they were implementing this to combat credit card fraud or something similar, but that’s not how this is being deployed.

Which means the face recognition was never necessary. It’s a way for companies to build a database that will eventually get exploited. 100% guarantee.

If used as login, together with some other method of access restriction?

Yeah, exactly.

Yeah, people with totally different facial structures get identified as the same person all the time with the “AI” facial recognition, especially if you’re darker skinned. Luckily (or unluckily) I’m white as can be.

I’m assuming Apple’s software is a purpose built algorithm that detects facial features and compares them, rather than the black box AI where you feed in data and it returns a result. That’s the smart way to do it, but it takes more effort.

people with totally different facial structures get identified as the same person all the time with the “AI” facial recognition

All the time, eh? Gonna need a citation on that. And I’m not talking about just one news article that pops up every six months. And nothing that links back to the ACLU’s misleading 2018 “report”.

I’m assuming Apple’s software is a purpose built algorithm that detects facial features and compares them, rather than the black box AI where you feed in data and it returns a result.

You assume a lot here. People have this conception that all FR systems are trained blackbox models. This is true for some systems, but not all.

The system I worked with, which ranked near the top of the NIST FRVT reports, did not use a trained AI algorithm for matching.

I’m not doing a bunch of research to prove the point. I’ve been hearing about them being wrong fairly frequently, especially on darker skinned people, for a long time now. It doesn’t matter how often it is. It sounds like you have made up your mind already.

I’m assuming that of Apple because it’s been around for a few years longer than the current AI craze has been going on. We’ve been doing facial recognition for decades now, with purpose built algorithms. It’s not much of a leap to assume that’s what they’re using.

I’ve been hearing about them being wrong fairly frequently, especially on darker skinned people, for a long time now.

I can guarantee you haven’t. I’ve worked in the FR industry for a decade and I’m up to speed on all the news. There’s a story about a false arrest from FR at most once every 5 or 6 months.

You don’t see any reports from the millions upon millions of correct detections that happen every single day. You just see the one off failure cases that the cops completely mishandled.

I’m assuming that of Apple because it’s been around for a few years longer than the current AI craze has been going on.

No it hasn’t. FR systems have been around a lot longer than Apple devices doing FR. The current AI craze is mostly centered around LLMs; object detection and FR systems have been evolving for more than 2 decades.

We’ve been doing facial recognition for decades now, with purpose built algorithms. It’s not much of a leap to assume that’s what they’re using.

Then why would you assume companies doing FR longer than the recent “AI craze” would be doing it with “black boxes”?

I’m not doing a bunch of research to prove the point.

At least you proved my point.

You don’t see any reports from the millions upon millions of correct detections that happen every single day. You just see the one off failure cases that the cops completely mishandled.

Obviously. I don’t have much of an issue with it when it’s working properly (although I do absolutely still have an issue with it). It being wrong and causing issues fairly frequently, and every 5 or 6 months is frequent (this is a low number, just the frequency of it causing newsworthy issues) with it not being deployed widely yet, is a pretty big issue. Scale that up by several orders of magnitude if it’s widely adopted and the errors will be constant.

No it hasn’t. FR systems have been around a lot longer than Apple devices doing FR. The current AI craze is mostly centered around LLMs; object detection and FR systems have been evolving for more than 2 decades… Then why would you assume companies doing FR longer than the recent “AI craze” would be doing it with “black boxes”?

You’re repeating what I said. Apple’s FR tech is a few years older than the machine learning tech that we have now. FR in general is several decades old, and it’s not ML based. It’s not a black box. You can actually know what it’s doing. I specifically said they weren’t doing it with black boxes. I said the AI models are. Please read again before you reply.

At least you proved my point.

You wrongly assuming what I said, which is actually the opposite of what I said, is the reason I’m not putting in the effort. You’ve made up your mind. I’m not going to change it, so I’m not putting in the effort it would take to gather the data, just to throw it into the wind. It sounds like you are already aware of some of it, but somehow think it’s not bad.

And yet this woman was mistaken for a 19-year-old 🤔

Shitty implementation doesn’t mean shitty concept, you’d think a site full of tech nerds would understand such a basic concept.

Pretty much everyone here agrees that it’s a shitty concept. Doesn’t solve anything and it’s a privacy nightmare.

Well I guess we’re lucky that no one on Lemmy has any power in society.

I think from a purely technical point of view, you’re not going to get FaceID kind of accuracy on theft prevention systems. Primarily because FaceID uses IR array scanning within arm’s reach from the user, whereas theft prevention is usually scanned from much further away. The distance makes it much harder to get the fidelity of data required for an accurate reading.

Yup, it turns out if you have millions of pixels to work with, you have a better shot at correctly identifying someone than if you have dozens.

Shorter answer: physics

I think from a purely technical point of view, you’re not going to get FaceID kind of accuracy on theft prevention systems. Primarily because FaceID uses IR array scanning within arm’s reach from the user, whereas theft prevention is usually scanned from much further away. The distance makes it much harder to get the fidelity of data required for an accurate reading.

This is true. The distance definitely makes a difference, but there are systems out there that get incredibly high accuracy even with surveillance footage.

And a lot of these face recognition systems are notoriously bad with dark skin tones.

Shit even the motion sensors on the automated sinks have trouble recognizing dark skinned people! You have to show your palm to turn the water on most times!

No they aren’t. This is the narrative that keeps getting repeated over and over. And the citation for it is usually the ACLU’s test on Amazon’s Rekognition system, which was deliberately flawed to produce this exact outcome (people years later still saying the same thing).

The top FR systems have no issues with any skin tones or complexions.

There are like a thousand independent studies on this, not just one

I promise I’m more aware of all the studies, technologies, and companies involved. I worked in the industry for many years.

The technical studies you’re referring to show that the difference between a white man and a black woman (usually polar opposite in terms of results) is around 0.000001% error rate. But this usually gets blown out of proportion by media outlets.

If you have white men at 0.000001% error rate and black women at 0.000002% error rate, then what gets reported is “facial recognition for black women is 2 times worse than for white men”.

It’s technically true, but in practice it’s a misleading and disingenuous statement.
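To make the relative-versus-absolute distinction concrete, here is a small sketch using the two illustrative percentages from this comment. They are the commenter's example figures, not numbers taken from the NIST report, and the one billion comparisons is an arbitrary assumption.

```python
from fractions import Fraction


def percent(value: str) -> Fraction:
    """Convert a percentage written as a decimal string into an exact fraction."""
    return Fraction(value) / 100


rate_a = percent("0.000001")  # illustrative error rate for group A
rate_b = percent("0.000002")  # illustrative error rate for group B

relative = rate_b / rate_a    # "2 times worse"
absolute = rate_b - rate_a    # difference as a plain probability

COMPARISONS = 1_000_000_000   # hypothetical workload
extra_errors = COMPARISONS * absolute

print(relative)      # 2
print(absolute)      # 1/100000000
print(extra_errors)  # 10
```

Both framings are arithmetically correct; whether ten extra errors per billion comparisons matters depends on how many comparisons are run and what happens to the people behind each error.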

Edit: here’s the actual technical report if anyone is interested

Would you kindly link some studies backing up your claims, then? Because nothing I’ve seen online has similar numbers to what you’re claiming

https://pages.nist.gov/frvt/reports/1N/frvt_1N_report.pdf

It’s a ~~481~~ 443 page report directly from the body that does the testing.

Edit: mistyped the number of pages

Edit 2: as I mentioned in another comment. I’ve read through this document many times. We even paid a 3rd party to verify our interpretations.

It saddens me that you are being downvoted for providing a detailed factual report from an authoritative source. I apologise in the name of all Lemmy for these ignorant people

Thanks! Appreciate it, will take a look when I have time

Fair. But you are asking us to trust your word when you could provide us with some links.

Yep, classic fallacy (? Bias?) of considering relative scales/change over absolute.

Here are some sources that speak about the difference between the two, and how different interpreters of data can use either or to further an argument:

Just go to a restaurant where public figures go and use a photo of their face.

like Deadpool did, with a stapler

Who the fuck wants this…? Besides the company raking in venture capital money.

I have come across a stranger online who looks exactly like me. We even share the same first name. We even live in the same area. I’m so excited for this wonderful new technology…

ಠ_ಠ

Are we assuming there is no pin or any other auth method? That would be unlike any other payment system I’m aware of. I have to fingerprint scan on my phone to use my credit cards even though I just unlocked my phone to attempt it

I’m going to have a field day with this. I’ve got an extremely common-looking face in a major city.

I’ve got an extremely common-looking face in a major city.

Indeed, it’s likely to be a problem, if you stick with committing few or no crimes.

The good news is that, should you choose to commit an above-average number of crimes, then the system will be prone to under-report you.

So that’s nice. /sarcasm, I’m not actually advocating for more crimes. Though I am pointing out that maybe the folks installing these things aren’t incentivising the behavior they want.

I’m advocating more crimes, but unfortunately am still below average.

Well, this blows the “if you’ve not done anything wrong, you have nothing to worry about” argument out of the water.

That argument was only ever made by dumb fucks or evil fucks. The article reports about an actual occurrence of one of the problems of such technology that we (people who care about privacy) have warned about from the beginning.

the way I like to respond to that:

“ok, pull down your pants and hand me your unlocked phone”

I’m stealing this.

And never close a cubicle door

Gotta say, I don’t think Officer Chauvin is going to take well to your request.

The US killed that argument a long time ago. We shot it in the back and claimed it had a gun.

Even if someone did steal a mars-bar… Banning them from all food-selling establishments seems… Disproportional.

Like if you steal out of necessity, and get caught once, you then just starve?

Obviously not all grocers/chains/restaurants are that networked yet, but are we gonna get to a point where hungry people are turned away at every business that provides food, once they are on “the list”?

No no, that would be absurd. You’ll also be turned away if you are not on the list if you’re unlucky.

This becomes even more ridiculous if you consider that we wasted about 1.05 billion tonnes of food worldwide in 2022 alone. (UNEP Food Waste Index Report 2024 Key Messages)

But no. Supermarkets will miss out on profits if they ban people from their stores who can’t pay.

Seems illogical? Because it is.

it’s like a no-fly list, but for food

it’s like a no-fly list, but for being alive

ftfy

get caught once, you then just starve?

Maybe they send you to Australia again?

The world hasn’t changed has it.

Sure it has. They send you to Rwanda now

They’ve essentially created their own privatized law enforcement system. They aren’t allowed to enforce their rules the same way a government would be, but punishment like banning a person from huge swaths of economic life can still be severe. The worst part is that private legal systems almost never have any concept of rights or due process, so there is absolutely nothing stopping them from being completely arbitrary in how they apply their punishments.

I see this kind of thing as being closely aligned with right wingers’ desire to privatize everything, abolish human rights, and just generally turn the world into a dystopian hellscape for anyone who isn’t rich and well connected.

I can see this being used against ex-employees.

Like if you steal out of necessity, and get caught once, you then just starve?

I mean… you could try getting on food stamps or whatever sort of government assistance is available in your country for this purpose?

In pretty much all civilized western countries, you don’t HAVE to resort to becoming a criminal simply to get enough food to survive. It’s really more of a sign of antisocial behavior, i.e. a complete rejection of the system combined with a desire to actively cause harm to it.

Or it could be a pride issue, i.e. people not wanting to admit to themselves that they are incapable of taking care of themselves on their own and having to go to a government office in order to “beg” for help (or panhandle outside the supermarket instead).

If that case ever does exist (god forbid), I hope that there’s something like a free-entry market so they can set up their own food solutions instead of being forced to starve.

If it’s a free market, and every existing business is coordinating to refuse to sell food to this person, then there’s a profit opportunity in getting food to them. You could even charge them double for food, and make higher profits selling to the grocery-banned class, while saving their lives.

That may sound cold-hearted, but what I’m trying to point out is that in this scenario, the profit motive is pulling that food to those people who need it. It’s incentivizing people who otherwise wouldn’t care, and enabling people who do care, to feed those hungry people by channeling money toward the solution.

And that doesn’t require anything specific about food to be in the code that runs the marketplace. All you need is a policy that new entrants to the market are allowed, and without any lengthy waiting process for a permit or whatever. You need a rule that says “You can’t stop other players from coming in and competing with you”, which is the kind of rule you need to run a free market, and then the rest of the problem is solved by people’s natural inclinations.

I know I’m piggybacking here. I’m just saying that a situation in which only some finite cartel of providers gets to decide who can buy food, is an example of a massive violation of free market principles.

People think “free market” means “the market is free to do evil”. No. “Free market” just means the people inside it are free to buy and sell what they please, when they please.

Yes it means stores can ban people. But it also means other people can start stores that do serve those people. It means “I don’t have to deal with you if I don’t want to, but I also can’t control your access to other people”.

A pricing cartel or a blacklisting cartel is a form of market disease. The best prevention and cure is to ensure the market is a free one - one which new players can enter at will - which means you can’t enforce that cartel reliably since there’s always someone outside the blacklisting cartel who could benefit from defecting from the blacklist.

That is some serious “capitalism can solve anything and therefore will, if only we let it”-type brain rot.

This “solution” relies on so many assumptions that don’t even begin to hold water.

Of course any utopian framework for society could deal with every conceivable problem… But in practice they don’t, and always require intentional regulation to a greater or lesser extent in order to prevent harm, because humans are humans.

This particular potential problem is almost certainly not the kind that simply “solves itself” if you let it.

And IMO suggesting otherwise is an irresponsible perpetuation of the kind of thinking that has led human civilization to the current reality of millions starving in the next few decades, due to the predictable environmental destruction of arable land in the near future.

Wtf kind of Randian hellscape nonsense is this? They should be allowed to charge double to exploit people who are already disadvantaged by the way other companies are treating them? Fuck this nonsense.

Go create your own bear-infested village somewhere nobody with any morals has to live near you. But this time do it from scratch rather than ruining things for the people already living there.

Stop giving corporations the power to blacklist us from life itself.

you will sit down and be quiet, all you parasites stifling innovation, the market will solve this, because it is the most rational thing in existence, like trains, oh god how I love trains, I want to be f***ed by trains.

~~Rand

I can see the Invisible Hand of the Free Market, it’s giving me the finger.

Right up your ass, no less.

It charged me for lube, and I thought about paying for it, but same-day shipping was a bitch and a half… I tried second class mail, but I think my bumhole would have stretched enough for this to stop hurting before it got anywhere near close to here so I just opted for that.

Can it give invisible hand jobs?

Yes, but they’re in the “If you have to ask, you couldn’t afford it in three lifetimes.” price range

Darn!

The whole wide world of authors who have written about the difficulties of this new technological age and you choose the one who had to pretend her work was unpopular

go read rand, her books literally advocate for an anti-social world order, where the rich and powerful have the ability to do whatever they want without impediment as the “workers” are described as parasites that should get in line or die

Are you suggesting they shouldn’t be allowed to ban people from stores? The only problem I see here is misused tech. If they can’t verify the person, they shouldn’t be allowed to use the tech.

I do think there need to be repercussions for situations like this.

Well there should be a limited amount of ability to do so. I mean there should be police reports or something at the very least. I mean, what if Facial Recognition AI catches on in grocery stores? Is this woman just banned from all grocery stores now? How the fuck is she going to eat?

That’s why I said this was a misuse of tech. Because that’s extremely problematic. But there’s nothing to stop these same corps from doing this to a person even if the tech isn’t used. This tech just makes it easier to fuck up.

I’m against the use of this tech to begin with but I’m having a hard time figuring out if people are more upset about the use of the tech or about the person being banned from a lot of stores because of it. Cause they are separate problems and the latter seems more of an issue than the former. But it also makes fucking up the former matter a lot more as a result.

I wish I could remember where I saw it, but years ago I read something in relation to policing that said a certain amount of human inefficiency in a process is actually a good thing to help balance bias and over reach that could occur when technology could technically do in seconds what would take a human days or months.

In this case, if a person is enough of a problem that their face becomes known at certain branches of a store, it’s entirely reasonable for that store to post a sign with their face saying they aren’t allowed. In my mind it would essentially create a certain equilibrium in terms of consequences and results. In addition to getting in trouble for stealing itself, that individual person also has a certain amount of hardship placed on them that may require they travel 40 minutes to do their shopping instead of 5 minutes to the store nearby. A sign and people’s memory also aren’t permanent, so it’s likely that after a certain amount of time that person would probably be able to go back to that store if they had actually grown out of it.

Or something to that effect. If they steal so much that they become known to the legal system there should be processes in place to address it.

And even with all that said, I’m just not that concerned with theft at large corporate retailers considering wage theft dwarfs thefts by individuals by at least an order of magnitude.

Reddit became a ban-happy wasteland, and if the tides swing a similar way, we’ll see a society where Big Tech gates people out of the very foundation of Modern Society. It’s exclusion that I’m against.

This can’t be true. I was told that if she has nothing to hide she has nothing to worry about!

Not the first time facial recognition tech has been misused, and certainly won’t be the last. The UK in particular has caught a lotta flak around this.

We seem to have a hard time connecting the digital world to the physical world and realizing just how interwoven they are at this point.

Therefore, I made an open source website called idcaboutprivacy to demonstrate the importance—and dangers—of tech like this.

It’s a list of news articles that demonstrate real-life situations where people are impacted.

If you wanna contribute to the project, please do. I made it simple enough to where you don’t need to know Git or anything advanced to contribute to it. (I don’t even really know Git.)

From your webpage: Privacy because protects our freedom to be who we are.

I think a word is missing in that sentence.

I’ll link your site on my personal website, which has a link collection. Seems cool.

What a great idea for a page. People are becoming blase about privacy even though it’s still important.

This is why some UK leaders wanted out of EU, to make their own rules with way less regard for civil rights.

It’s the Tory way. Authoritarianism, culture wars, fucking over society’s poorest.

nah i think the main thing was a super fragile identity. i mean they have been shit all the time since before the EU. when talks between france, germany and uk took place they insisted on taking control of the EU.

if you live on an island for generations with limited new genetic input…well, thats where you end up.

We humans have these things called “boats” that have enabled the British Isles to receive regular inputs of new genetic material. Pretty useful things, these boats, and somewhat pivotal in the history of the UK.

sure

I don’t understand the tendency to attribute harmful behaviours of the rich and powerful to these strange, irrational reasons. No, UK leaders didn’t spend millions upon millions on propaganda because they have a fragile identity. They did it because they’ll make money off of it, and will be able to move the legislation towards their own goals.

It’s the same when people say Putin invaded Ukraine because he wants to restore the glory of the Soviet Union. No, he doesn’t care about any of that, he cares about staying in power and becoming more powerful. One of the best ways to do so is to invade other countries, as long as you don’t lose.

> It’s the same when people say Putin invaded Ukraine because he wants to restore the glory of the Soviet Union. No, he doesn’t care about any of that, he cares about staying in power and becoming more powerful. One of the best ways to do so is to invade other countries, as long as you don’t lose.

Thank you. I see so many people who don’t get it. I’m happy some people understand it without my having to send them a link to one of the few Ekaterina Shulman lectures in English.

Thank you for the validation, sometimes I feel like I’m going crazy with how often these things are repeated.

But those lectures do sound interesting - would you mind linking them when you have the time?

This is not the lecture I originally intended to post. Also, a small correction for 1:00:02: the first answer in the poll should be translated as “social fairness”.

If you find the lecture where she talks about “dealing with internal problems by external means” and “dropping concrete slab on nation’s head”, that is the one I intended to link, but I’m still searching for which one it is.

Awesome, thank you!

> if you live on an island for generations with limited new genetic input…well, thats where you end up.

Literally the most diverse country in Europe lol

Nah the core drivers wanted their own little neoliberal haven where they didn’t have to listen to the EU. They’d have been rich either way, but this way they get more power.

Even if she were the shoplifter, how would that work? “Sorry mate, you shoplifted when you were 16, now you can never buy food again.”?

Minority Report vibes…

Facial recognition still struggles with really bad mistakes that are always bad optics for the business that uses it. I’m amazed anyone is still willing to use it in its current form.

It’s been the norm that these systems can’t tell the difference between people of dark pigmentation, if they even acknowledge they’re seeing a person at all.

Running a system with a decade-long history of racist-looking mistakes is bonkers in the current climate.

The catch is that it’s only really a problem for the people getting flagged. Then you’re guilty until proven innocent, and the only person to blame is a soulless machine with a big button that reads “For customer support, go fuck yourself”.

As security theater, it’s cheap and easy to implement. As a passive income stream for tech bros, it’s a blank check. As a buzzword for politicians who can pretend they’re forward-thinking by rolling out some vaporware solution to a non-existent problem, it’s on the tip of everyone’s tongue.

I’m old enough to remember when sitting Senator Ted Kennedy got flagged by the Bush Admin’s No Fly List and people were convinced this is the sort of shit that would reform the intrusive, incompetent, ill-conceived TSA. 20 years later… nope, it didn’t. Enjoy techno-hell, Brits.

I’m curious how well the systems can differentiate doppelgangers and identical twins. Or if some makeup is enough to fool it.

Facial recognition uses a few key elements of the face to home in on matches, and traditional makeup doesn’t obscure any of those areas. In order to fool facial recognition, the goal is often to avoid face detection in the first place; asymmetry, large contrasting colors, obscuring one (or both) eyes, hiding the oval head shape and jawline, and rhinestones (which sparkle and reflect light nearly randomly, making videos more confusing) seem to work well. But as neural nets improve, they also get harder to fool, so what works for one system may not work for every system.

CV Dazzle (originally inspired by dazzle camouflage used on some warships) is a makeup style that tries to fool the most popular facial recognition systems.

Note that those tend to obscure the bridge of the nose, the brow line, the jawline, etc… Because those are key identification areas for facial recognition.

Functional and subversive, just how I like my makeup

Yeah, if we can still recognize those as faces, it’s possible for a neural net to do so as well.

But I’m talking more about differentiating faces than hiding entirely from such systems. Like makeup can be used to give the illusion that the shape of the face is different with false contour shading. You can’t really change the jawline (I think… I’m not skilled in makeup myself but have an awareness of what it can do) but you can change where the cheekbones appear to be, as well as the overall shape of the cheeks, and you can adjust the nose, too (unless it’s a profile angle).

I think the danger in trying to hide that you have a face entirely is that if it gets detected, there’s a good chance that it will be flagged for attempting to fool the system because those examples you gave are pretty obvious, once you know what’s going on.

It would be like going in to a bank with a ski mask to avoid being recognized vs going in as Mrs Doubtfire. Even if they are just trying to do banking that one time, the ski mask will attract unwanted attention while using a different face would accomplish the goal of avoiding attention.

You make it sound like that’s a bad thing.

Can’t wait for something like this to get hacked. There’ll be a lot of explaining to do.

Still, I think the only way that would result in change is if the hack specifically went after someone powerful like the mayor or one of the richest business owners in town.

I read this in a Ricky Ricardo voice.

Oh good… janky oversold systems that do a lot of automation on a very shaky basis are also having high impacts when screwing up.

Also “Facewatch” is such an awful sounding company.

Cara Filter wouldn’t be bad, just for the ring of it

The developers should be looking at jail time as they falsely accused someone of committing a crime. This should be treated exactly like if I SWATed someone.

I get your point but totally disagree this is the same as SWATing. People can die from that. While this is bad, she was excluded from stores, not murdered

You lack imagination. What happens when the system mistakenly identifies someone as a violent offender and they get tackled by a bunch of cops, likely resulting in bodily injury?

That would then be an entirely different situation?

I mean, the article points out that the lady in the headline isn’t the only one who has been affected; a dude was falsely detained by cops after they parked a facial recognition van on a street corner and grabbed anyone who was flagged.

That’s not very reassuring, we’re still only one computer bug away from that situation.

Presumably she wasn’t identified as a violent criminal because the facial recognition system didn’t associate her duplicate with that particular crime. The system would be capable of associating any set of crimes with a face. It’s not like you get a whole new face for each different possible crime. So, we’re still one computer bug away from seeing that outcome.

No, it wouldn’t be. The base circumstance is the same, the software misidentifying a subject. The severity and context will vary from incident to incident, but the root cause is the same - false positives.

There’s no process in place to prevent something like this going very very bad. It’s random chance that this time was just a false positive for theft. Until there’s some legislative obligation (such as legal liability) in place to force the company to create procedures and processes for identifying and reviewing false positives, then it’s only a matter of time before someone gets hurt.

You don’t wait for someone to die before you start implementing safety nets. Or rather, you shouldn’t.
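To put rough numbers on "only a matter of time", here is a back-of-the-envelope sketch. Every figure in it is made up for illustration (the scan volume, the time span, and the one-in-ten-million false match rate are assumptions, not Facewatch statistics), and `expected_false_flags` is a hypothetical helper name.

```python
def expected_false_flags(total_scans, one_false_match_per):
    """Expected number of innocent people flagged, assuming every scan
    has the same independent chance of producing a false match."""
    return total_scans / one_false_match_per

# Hypothetical deployment: 2 million face scans a day for a year,
# with an assumed one false match per 10 million scans.
scans_per_year = 2_000_000 * 365
print(expected_false_flags(scans_per_year, 10_000_000))  # 73.0
```

Under those assumed numbers, even a system that sounds nearly perfect still flags someone wrongly more than once a week, which is why a review process matters more than the headline accuracy figure.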

This happens in the USA without face recognition

Mike Judge calling it out again https://youtu.be/5d7SaO0JAHk?si=rieJnFE0YHd-_3lY

People should be thrown in jail over a hypothetical?

In the UK at least a SWATing would be many many times more deadly and violent than a normal police interaction. Can’t make the same argument for the USA or Russia, though.

Difference in degree not kind

I’m not so sure the blame should solely be placed on the developers - unless you’re using that term colloquially.

Developers were probably the first people to say that it isn’t ready. Blame the sales people that will say anything for money.

It’s impossible to have a 0% false positive rate, it will never be ready and innocent people will always be affected. The only way to have a 0% false positive rate is with the following algorithm:

```python
def is_shoplifter(face_scan):
    return False
```

```
line 2
    return False
    ^^^^^^
IndentationError: expected an indented block after function definition on line 1
```

In their defense, it didn’t return a false positive

Weird, for me the indentation renders correctly. Maybe because I used Jerboa and single ticks instead of triple ticks?

Interesting. This is certainly not the first time there have been markdown parsing inconsistencies between clients on Lemmy, the most obvious example being subscript and superscript, especially when ~multiple words~ ^get used^ or you use ^reddit ^style ^(superscript text).

But yeah, checking just now on Jerboa you’re right, it does display correctly the way you did it. I first saw it on the web in lemmy-ui, which doesn’t display it properly, unless you use the triple backticks.
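Back on the 0% false positive joke: a minimal sketch of why that "algorithm" is useless in practice. The `rates` helper and the labelled sample data are invented for illustration; the point is only that a classifier that never flags anyone gets a 0% false positive rate by also catching 0% of actual shoplifters.

```python
def is_shoplifter(face_scan):
    # The joke classifier: never flags anyone.
    return False

def rates(classifier, labelled_scans):
    """Return (false_positive_rate, true_positive_rate) for a classifier
    over a list of (scan, actually_a_shoplifter) pairs."""
    fp = tp = negatives = positives = 0
    for scan, actually_a_shoplifter in labelled_scans:
        flagged = classifier(scan)
        if actually_a_shoplifter:
            positives += 1
            tp += flagged   # bool counts as 0 or 1
        else:
            negatives += 1
            fp += flagged
    return fp / negatives, tp / positives

# Made-up labelled data: (scan id, actually a shoplifter?)
scans = [("a", False), ("b", False), ("c", True), ("d", False), ("e", True)]
print(rates(is_shoplifter, scans))  # (0.0, 0.0)
```

Any real system has to flag some faces to be of any use, and the moment it does, the false positive rate stops being zero.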

They worked on it, they knew what could happen. I could face criminal charges if I do certain things at work that harm the public.

I have no idea where Facewatch got their software from. The developers of this software could’ve been told their software will be used to find missing kids. Not really fair to blame developers. Blame the people on top.

It says right on their webpage what they are about.

Developers don’t always work directly for companies. Companies pivot.

You can arrest their managers as well, good point.

We have so many dystopian futures and we decided to invent a new one.

Actually this one feels pretty similar to watch_dogs. Wasn’t this the plot to watch_dogs 2?

Now I’m interested in the plot of watch dogs 2…

Edit: it’s indeed the plot of watch dogs 2

https://en.m.wikipedia.org/wiki/Watch_Dogs_2

In 2016, three years after the events of Chicago, San Francisco becomes the first city to install the next generation of ctOS – a computing network connecting every device together into a single system, developed by technology company Blume. Hacker Marcus Holloway, punished for a crime he did not commit through ctOS 2.0 …

Also, a kickass soundtrack by Hudson Mohawke.

As an American, I don’t know what opinion to have about this without knowing the woman’s race.

Literally what people on Lemmy think.

If she’s been flagged as shoplifter she’s probably black!

Why, because all shoplifters are black? I don’t understand. She’s being mistaken for another person, a real person on the system.

I used to know a smackhead that would steal things to order, I wonder if he’s still alive and whether he’s on this database. Never bought anything off him but I did buy him a drink occasionally. He’d had a bit of a difficult childhood.

Because facial recognition systems infamously cannot tell black people apart.

i think it’s more that all facial recognition systems have a harder time picking up faces with darker complexion (same idea why phone cameras are bad at it for example). this failure leads to a bunch of false flags. it’s hilariously evident when you see police jurisdictions using it and arresting innocent people.

not saying the woman’s of colored background, but it would explain why it might be triggering.

Because the algorithm trying to identify shoplifters is probably trained on a biased dataset

That’s not how it works! It has mistakenly identified her as a specific individual who was already convicted of theft.

> Why, because all shoplifters are black?

All the ones that get caught. When you’re white, you can steal whatever you want.

Congratulations, you just identified yourself as a racist and need to understand you can’t just judge someone without first getting to know them.