Google has been literally unusable for search for years.

It’s been getting worse and worse over time for whatever reasons, but the AI summary at the top now can be way off. I had a result the other day where, at a quick glance (all that I give it as I scroll down to the results), I laughed because I could tell it was totally wrong, and I couldn’t even figure out where it got that result from. It wasn’t in the results I found.

My favourite (inconsequential, but incredibly stupid) automatic AI question/answer from Google:

I was looking for German playwright Brecht’s first name. The answer was Bertolt. It’s a pretty simple question, so that at least was correct.

However, among the initial “frequently asked questions”, one was “What is the name of the Armored Titan?”

Somehow Google decided it would randomly answer a question about manga/anime Attack on Titan in there. The only link between that question and my query is the answer, Bertolt (so of course, it wasn’t in my query). Because there’s a guy called Bertolt too in that story.

By the way, Attack on Titan’s Bertolt is not the armoured titan.

Or if you are set on using AI Overviews to research products, then be intentional about asking for negatives and always fact-check the output as it is likely to include hallucinations.

If it is necessary to fact check something every single time you use it, what benefit does it give?

None. None at all.

None. It’s made with the clear intention of substituting itself for actual search results.

If you don’t fact-check it, it’s dangerous and/or a thinly disguised ad. If you do fact-check it, it brings absolutely nothing that you couldn’t find on your own.

Well, except hallucinations, of course.

It might be able to give you tables or otherwise collated sets of information about multiple products etc.

I don’t know if Google does, but LLMs can. Also do unit conversions. You probably still want to check the critical ones. It’s a bit like using an encyclopedia or a catalog except more convenient and even less reliable.

You can do unit conversions with PowerToys on Windows, Spotlight on Mac and whatever they call the nifty search bar on various Linux desktop environments without even hitting the internet, with exactly the same convenience as an LLM. Doing discrete things like that with LLM inference is the most inefficient and stupid way to do them.

All things were doable before. The point is that they were manual extra steps.

They weren’t though. You put stuff in the search bar and it detected you were asking about unit conversion and gave you an answer, without ever involving an llm. Are you being dense on purpose?

It hasn’t stopped anyone from using ChatGPT, which has become their biggest competitor since the inception of web search.

So yes, it’s dumb, but they kind of have to do it at this point. And they need everyone to know it’s available from the site they’re already using, so they push it on everyone.

No, they don’t have to use defective technology just because everyone else is.

Yes. They do.

I am seeing more and more people trusting that “zero-click search” result without looking for any kind of source or discussion around their information. Honestly, it’s scary.

Yeah the article is important. Not for the likes of us, but most people around us. I hope they do read it or the info somehow trickles through.

- Google is shitty

- LLMs are shitty

Combine the 2:

oh no who would have thought it would come to this

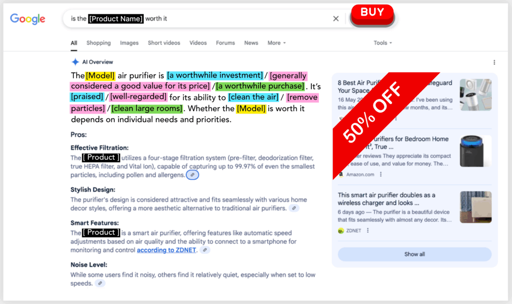

Don’t bother asking Google if a product is worth it; it will likely recommend buying whatever you show interest in—even if the product doesn’t exist.

This seems like a general problem with these LLMs. Sometimes when I’m programming I ask the AI what it thinks about how I propose to approach some design issue or problem. It pretty much always encourages me to do what I proposed to do, and tells me it’s a good approach. So I’m using it less and less because it seems the LLMs are encouraged to agree with the user and sound positive all the time. I’m fairly sure my ideas aren’t always good. In the end I’ll be discovering the pitfalls for myself with or without time wasted asking the LLM.

The same thing seems to happen when people try to use an LLM as a therapist. The LLM is overly encouraging and agreeable, and it sends people down deep rabbit holes of delusion.

Didn’t take as long as I expected, but I expected it (which is why I didn’t bother to read, even if it’s not all the way there, it’s coming).

Seriously, advertising (or propaganda, to use the older name favoured by Goebbels) really needs to be seen as a much more serious enemy than most people see it. Propaganda for capitalists is super effective at sucking up people’s mental bandwidth. They’ve been selecting for it going on a century now and they’re depressingly good at it. If you don’t actively counter it, it goes straight to the subconscious, along with all the background crap in it. /rant, but seriously…

The part about Reddit communities being built now that contain only AI questions, AI answers, and links to products is what I figured Spez wanted when he IPO’d. And with AI writing convincing text, it’s so easy!

The Reddit team is developing a bot that can post “this”.

They’re building a datacenter full of Nvidia hardware for it.

Working as designed.

Was trying to find info about a certain domain the other day - damn near impossible just cause all the results I could find were the same type of AI slop.

garbage in, garbage out.

Spam in, spam out, profit in the middle.

An advertising company are advertising at you? Whatever next?

This isn’t much of a change. Before AI it was SEO slop. Search for product reviews and you get a bunch of pages “reviewing” products by copying the amazon description and images.

Not surprising

I do not know how to even shop anymore. All the name brands I know have gotten worse, most of the new brands are unknown and have AI reviews.