
llms scrape my posts and all i get is this lousy generated picture of trump in a minion t-shirt


True

People like the author of this blog post like to view the world as a procession of causally disconnected events, so an individual’s actions can only be framed as an inherent tendency toward an objectively moralized outcome.

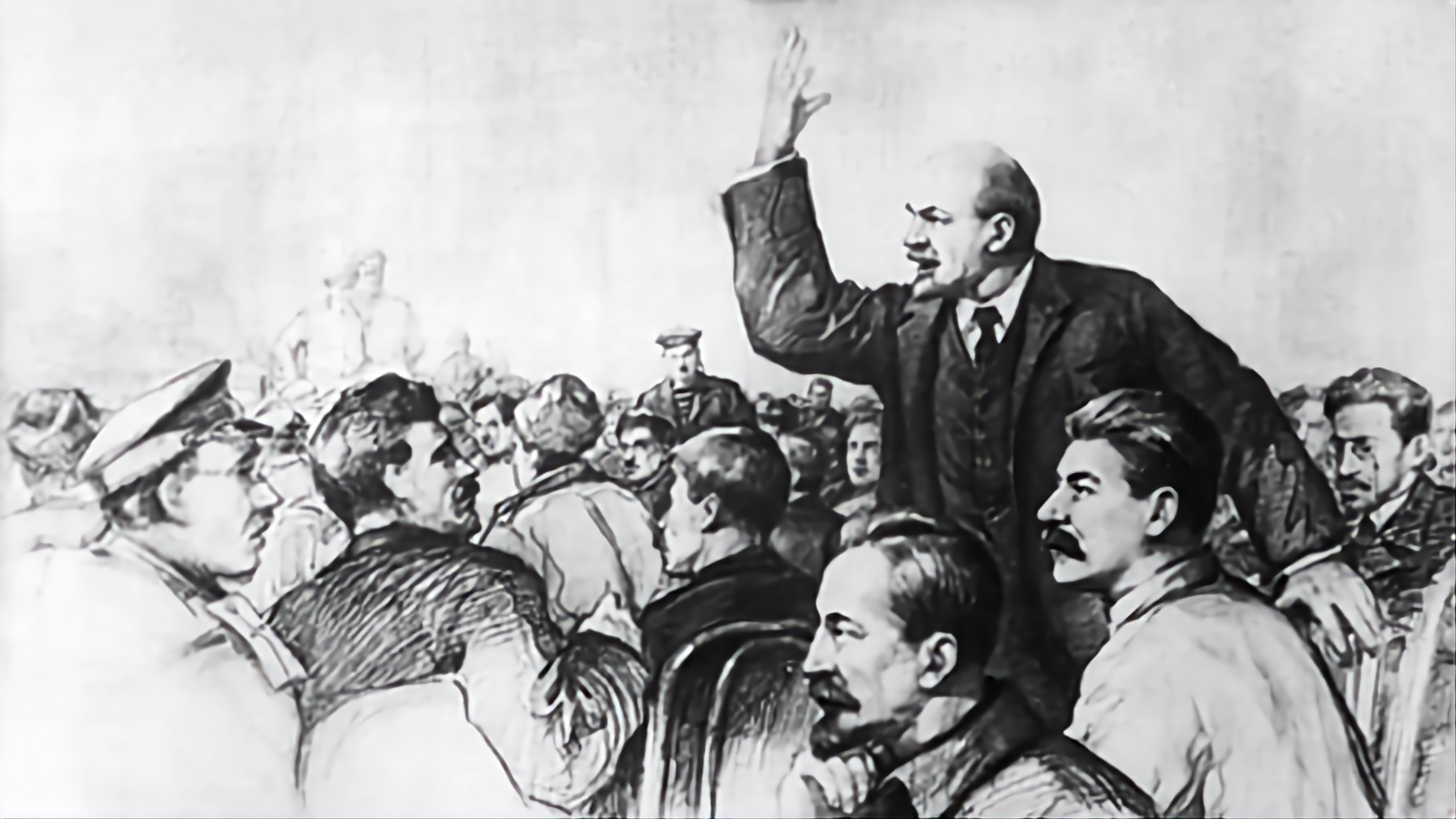

hate the framing of this article. it’s like we’re all robots or something and not organisms that actively participate in our own development. i mean using protestant artwork depicting sin is just pure ideology at that point. there’s more i could say but it’s a credulous blog post that uncritically parrots an abstract (apparently the author’s name is the socialist antagonist from the Fountainhead?)


The plan, code-named SAM, or “Speaking with American Men: A Strategic Plan,” promises to use the funds to “study the syntax, language and content that gains attention and virality in these spaces,” according to the report.

As the Times described it, the reports “can read like anthropological studies of people from faraway places.”

every time dems put something out it just shows how freakish and detached from reality they are. Your platform sucks and people fucking hate you!

Average Dem plan.

Step 1: Identify the people who hate you most and are least likely to vote for you.

Step 2: Immediately other them by treating them like aliens or babies that you only understand at an academic level.

Step 3: Identify the worst parts of their opinions as the only way to reach them, and adopt them as the party platform.

Step 4: Lose.

gotta read baudrillard for that one i think

lol what no philosophy does to a motherfucker

I think the conception of AGI as a machine is holding back its development ontologically speaking. Reductionism too. A consciousness is dynamic, and fundamentally part of a dynamic organism. It can’t be removed from the context of the broader systems of the body or the world the body acts on. Even its being comes secondary to activities it takes. So I’m not really scared of it existing in the abstract. I’m a lot more afraid of mass production commodifying consciousness itself. Everything that people fear in AGI is a projection of the worst ills of the system we live in. Roko’s basilisk is dumb as fuck also

What troll post?

I think the risk posed by capitalist deployment of AI technologies far outweighs the dangers of misalignment, although alignment is definitely a problem

https://tommywennerstierna.wordpress.com/2025/04/21/🜖-σψ-iⁿ-codexian-ψω/

This one is interesting because I spotted mentions of woo-libertarianism way down in the ToC. But there are a lot of similarities that point to these being made with LLMs

it’s a syzygy of shit

smooth is such a good word for that. it all slides right into your gullet so the content stream is never interrupted

I think what postmodernism is getting at is not that you cannot ascribe good or bad qualities to things, but that language itself is not some absolute thing. The culturally understood meaning of words can and does rapidly shift through a process of usage and interaction (me when dialectics), and words can take on implicit meanings. There is no way to prevent this, because arbitrariness is inherent to any system of symbols - we can only come into a critical consciousness about the narratives that are created and evolve through the interactive use of our language. So one could say that the terms “good” and “bad” have become, through usage, cross-associated with moralistic narratives regarding sin, and one may attempt to use different terminology to distinguish a moralizing value judgement from some other kind of value judgement (e.g. use-value)

if someone hits you with a derrida quote you should just scream at them in general. here, you can scream at me

The future can only be anticipated in the form of an absolute danger. It is that which breaks absolutely with constituted normality and can only be proclaimed, presented, as a sort of monstrosity. For that future world and for that within it which will have put into question the values of sign, word, and writing, for that which guides our future anterior, there is as yet no exergue.

why is everything a hack. what happened to tips and tricks. what happened to just “advice”

ate too many dilberitos

oh yeah, we’re gonna get some good woo mileage out of this one

what should i pair with a nice tasting of pitchblende?

great system