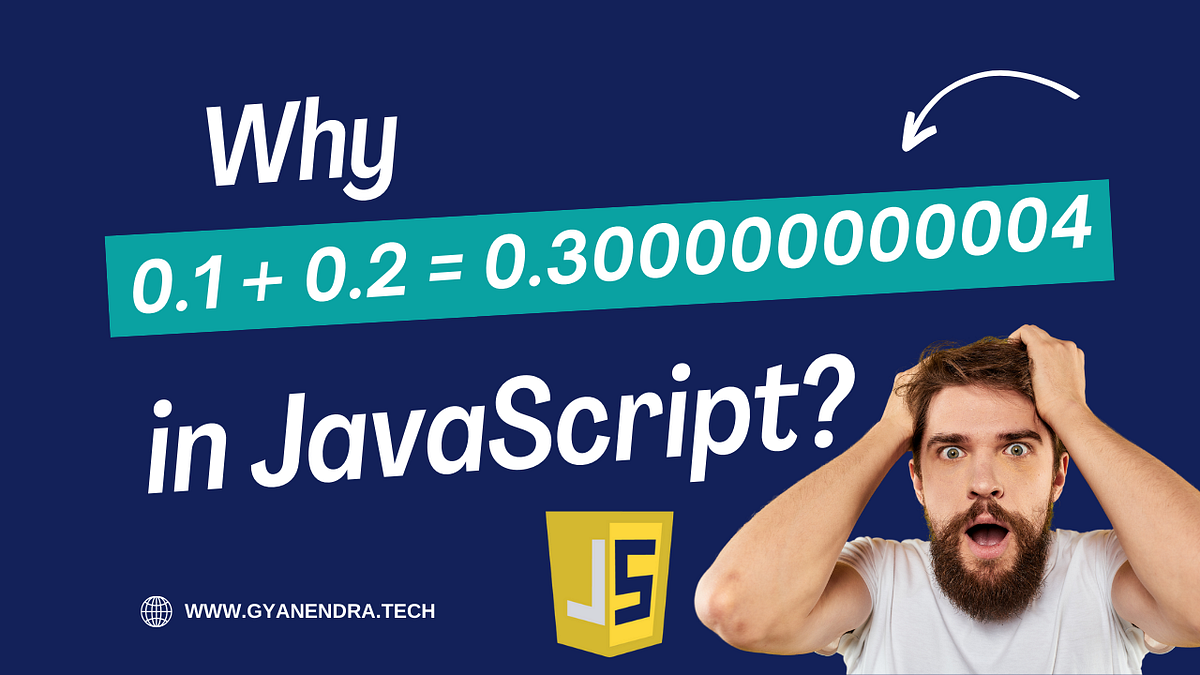

Have you performed simple arithmetic operations like 0.1 + 0.2? You might have gotten something strange: 0.1 + 0.2 = 0.30000000000000004.

Floating-point arithmetic is important to understand at least vaguely since it’s a pretty leaky abstraction. Fortunately, we don’t need a “✨Member-only story” on Medium to get acquainted with the underlying concepts.

It also includes a non-member link.

Ugh, I thought this was a question, not a link. So I spent time googling for a good tutorial on floats (because I didn’t click the link)…

Now I hate myself, and this post.

Don’t hate yourself. At least you searched it properly. See it this way, you learned from a failure more than anyone who did not fail. You are now stronger!

JavaScript is truly a bizarre language - we don’t need to go as far as arbitrary-precision decimal, it does not even feature integers.

I have to wonder why it ever makes the cut as a backend language.

Popularity and ease of use I guess.

The JavaScript Number type is implemented as an IEEE 754 double, and as such any integer between -2^53 and 2^53 is represented without loss of precision. I can’t say I’ve ever missed explicitly declaring a value as an integer in JS. It’s dynamically typed anyways. There’s the languages people complain about and the ones nobody uses.
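To make that range concrete, here is a minimal sketch using only the standard `Number.MAX_SAFE_INTEGER` and `Number.isSafeInteger` built-ins. The variable names are just for illustration.

```javascript
// Largest integer n for which both n and n + 1 are exactly representable.
const maxSafe = Number.MAX_SAFE_INTEGER; // 2^53 - 1

// Just past 2^53, neighbouring integers start to collapse into the same double.
const collides = 2 ** 53 === 2 ** 53 + 1;

console.log(maxSafe === 2 ** 53 - 1);           // true
console.log(collides);                          // true
console.log(Number.isSafeInteger(2 ** 53 - 1)); // true
console.log(Number.isSafeInteger(2 ** 53));     // false
```

Note that 2^53 itself is still stored exactly; it just isn't "safe", because 2^53 + 1 rounds to the same value.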

And then JSON doesn’t restrict numbers to any range or precision; and at least when I deal with JSON values, I feel the need to represent them as a BigDecimal or similar arbitrary precision type to ensure I am not losing information.
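A small sketch of what that information loss looks like with the built-in `JSON.parse`. The `id` field and the string-based workaround are assumptions for illustration, not something the JSON format prescribes.

```javascript
// The JSON text carries the exact digits, but JSON.parse produces a Number.
const text = '{"id": 9007199254740993}'; // 2^53 + 1
const parsed = JSON.parse(text);

console.log(parsed.id); // 9007199254740992, the last digit changed

// One possible workaround (an assumption, not the only option):
// the producer sends large values as strings and the consumer converts to BigInt.
const safeText = '{"id": "9007199254740993"}';
const exactId = BigInt(JSON.parse(safeText).id);

console.log(exactId.toString()); // 9007199254740993
```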

I hope you work in a field where worrying about your integers hitting larger values than 9 quadrillion is justified.

Could be a crypto key, or a randomly distributed 64-bit database row ID, or a memory offset in a stack dump of a 64-bit program.
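For values like these, JavaScript's built-in `BigInt` keeps every bit. A rough sketch, where `rowId` is a made-up 64-bit value:

```javascript
// A hypothetical 64-bit row ID, far above Number.MAX_SAFE_INTEGER.
const rowId = 18446744073709551557n;

// Converting to Number rounds to the nearest representable double,
// so converting back does not return the original value.
const asNumber = Number(rowId);
const roundTrips = BigInt(asNumber) === rowId;

console.log(roundTrips);                          // false
console.log(BigInt.asUintN(64, rowId) === rowId); // true, the value fits in 64 bits
```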

It’s how CPUs do floating-point calculations. It’s not just JavaScript. Long story short, a float is stored as one bit for the +/- sign, some bits for a base value (the mantissa), and some bits for the exponent. As a result, some numbers aren’t representable exactly.
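If you want to see those three fields, here is a sketch that pulls them out of a 64-bit double with the standard `DataView` API. The `decodeDouble` helper is hypothetical, and the unbiased exponent it reports is only meaningful for normal numbers (not zero, subnormals, Infinity, or NaN).

```javascript
// Split a double into its IEEE 754 fields:
// 1 sign bit, 11 exponent bits, 52 fraction (mantissa) bits.
function decodeDouble(value) {
  const view = new DataView(new ArrayBuffer(8));
  view.setFloat64(0, value); // big-endian by default
  const bits = view.getBigUint64(0);

  const fractionMask = (1n << 52n) - 1n;
  return {
    sign: Number(bits >> 63n),
    exponent: Number((bits >> 52n) & 0x7ffn) - 1023, // remove the bias of 1023
    fraction: (bits & fractionMask).toString(2).padStart(52, "0"),
  };
}

console.log(decodeDouble(0.1));
```

For 0.1 the fraction is a repeating `1001` pattern that has to be cut off and rounded at the 52nd bit, which is where the error comes from.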

A good way to think of it is to compare something similar in decimal. .1 and .2 are precise values in decimal, but can’t be represented as perfectly in binary. 1/3 might be a pretty good similar-enough example. With a lack of precision, that might become 0.33333333, which when added in the expression 1/3 + 1/3 + 1/3 will give you 0.99999999, instead of the correct answer of 1.
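The decimal analogy can be sketched in code. This assumes a made-up format that keeps exactly 8 digits after the decimal point, and it uses integer units of 10^-8 so the demo itself doesn't introduce any binary rounding.

```javascript
// A toy decimal "float" with 8 digits after the point, stored as an integer count of 1e-8.
const SCALE = 100000000;              // 10^8
const third = Math.floor(SCALE / 3);  // 33333333, i.e. 0.33333333
const sum = third + third + third;    // 99999999, i.e. 0.99999999

console.log(`0.${third}`);  // 0.33333333
console.log(`0.${sum}`);    // 0.99999999
console.log(sum === SCALE); // false: we never get back to exactly 1
```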

Python has no issues representing `1/3 + 1/3 + 1/3` as 1. I just opened a Python interpreter, imported absolutely no libraries, typed `1/3 + 1/3 + 1/3`, hit enter, and got 1 as the result. Seems like if Python could do that, JavaScript should be able to as well.

I thought it was a rather simple analogue, but I guess it was too complicated for some?

I said nothing about JavaScript or Python or any other language with my 1/3 example. I wasn’t even talking about binary. It was an example of something that might be problematic if you added numbers in an imprecise way in decimal, the same way binary floating point fails to accurately represent 1/10 + 1/5 from the OP.
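For what it's worth, JavaScript gives the same answer as Python for that expression, since both use IEEE 754 doubles here. A quick sketch you can paste into any console:

```javascript
// In binary doubles the rounding errors in 1/3 + 1/3 + 1/3 happen to cancel...
const thirds = 1 / 3 + 1 / 3 + 1 / 3;
console.log(thirds === 1); // true

// ...while for 0.1 + 0.2 they do not.
const tenths = 0.1 + 0.2;
console.log(tenths === 0.3); // false
console.log(tenths);         // 0.30000000000000004
```

So the thirds coming out as exactly 1 is a lucky rounding, not a sign that 1/3 is stored exactly.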

If you are adding 0.1 + 0.2, then you can cut off anything after the first digit (after the dot, of course), because every later digit of both 0.1 and 0.2 is 0. That can help with rounding errors in floating-point calculations. I don’t program JavaScript, so no idea what the best way to go about it would be.

How would you implement this in code?
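One way to sketch the "cut off after the first digit" idea is to round to a fixed number of decimal places. `roundTo` is a hypothetical helper built on the standard `Math.round`, not a built-in.

```javascript
// Hypothetical helper: round a Number to a given count of decimal places.
function roundTo(value, places) {
  const factor = 10 ** places;
  return Math.round(value * factor) / factor;
}

console.log(roundTo(0.1 + 0.2, 1));         // 0.3
console.log(roundTo(0.1 + 0.2, 1) === 0.3); // true
```

This is fine for tidying up results, but it is not a general fix: the multiplication is itself a floating-point operation, so inputs that sit just below a rounding boundary (1.005 with two places is the usual example) can still round the "wrong" way.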

I don’t have much JavaScript experience, but maybe `.toFixed()` will help here. Playground (copy the code below into the playground to test): https://playcode.io/javascript

```javascript
const number = 0.1 + 0.2
const fixed = number.toFixed(3) // toFixed() returns a string

// Update header text (assumes the playground page has a #header element)
document.querySelector('#header').innerHTML = fixed

// Log to console
console.log(number)
console.log(fixed)
```

outputs:

```
0.30000000000000004
0.300
```
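Two things worth keeping in mind with this approach, sketched below: `toFixed()` gives back a string, so it needs converting before further arithmetic, and for comparisons a tolerance check is a common alternative. `nearlyEqual` is a hypothetical helper, and using `Number.EPSILON` as the default tolerance is an assumption that only makes sense for values around 1.

```javascript
const total = 0.1 + 0.2;

// toFixed() returns a string, so convert back before doing more arithmetic.
const fixedText = total.toFixed(3);    // "0.300"
const fixedNumber = Number(fixedText); // 0.3

// Hypothetical helper: compare with a tolerance instead of ===.
function nearlyEqual(a, b, tolerance = Number.EPSILON) {
  return Math.abs(a - b) <= tolerance;
}

console.log(typeof fixedText);        // string
console.log(fixedNumber === 0.3);     // true
console.log(nearlyEqual(total, 0.3)); // true
```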