- cross-posted to:

- technews@radiation.party

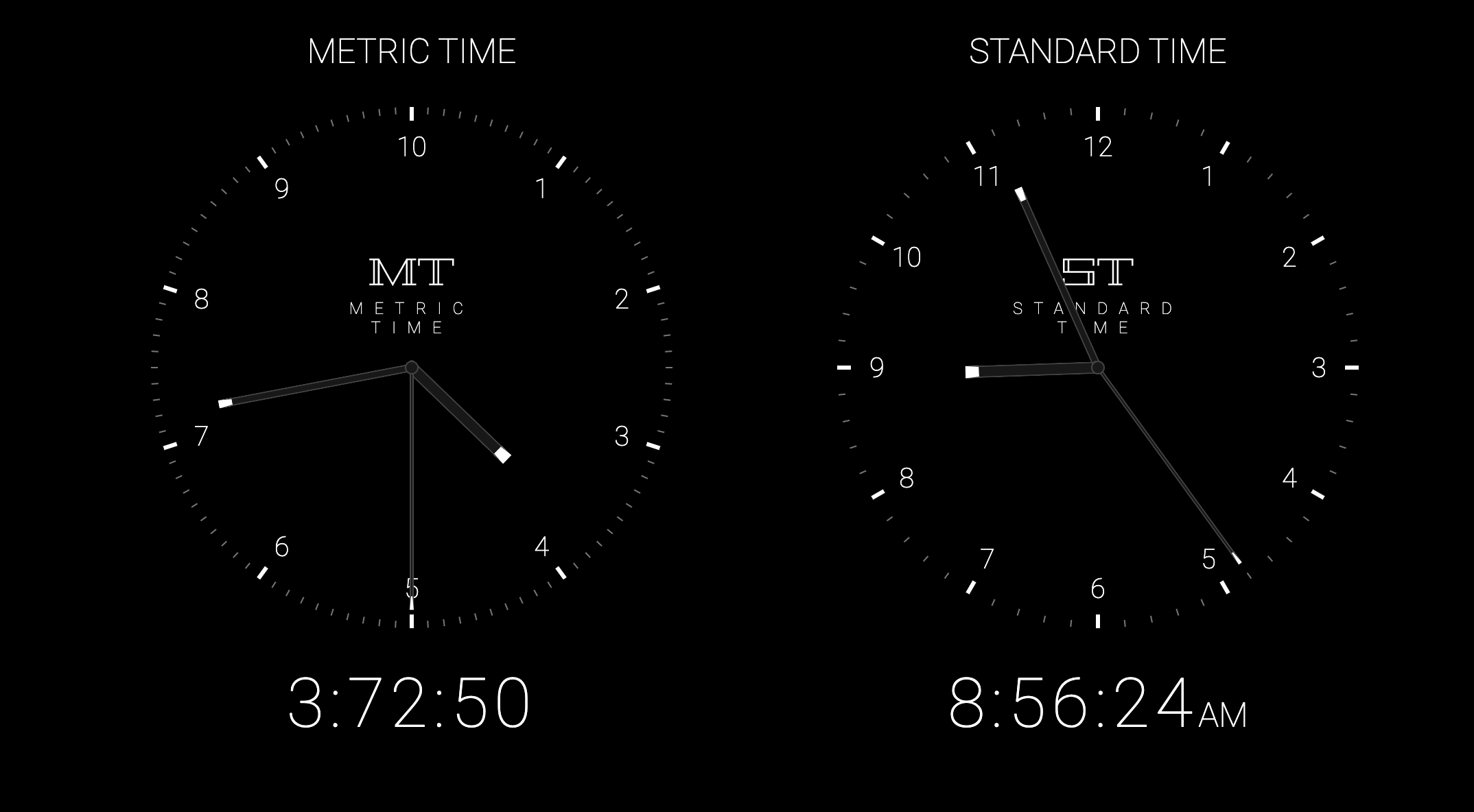

What do you think?

You can read more here: https://en.wikipedia.org/wiki/Metric_time

Neat but holy good fucking god the amount of programming it would take if it was ever decided to change this going forward, not to mention how historical times would be referenced. Datetime programming is already such a nightmare.

I sit in a cubicle and I update bank software for the ~~2000~~ metric switch.

Lol. Seriously though, something like this would be interesting to watch, given that we'll have to face the year 2038 problem anyway. The 2000 switch was still doable because there were relatively few devices and programs to fix, but with the number of devices and programs out there now, let alone in 2038, I really have no idea how it's going to be managed.
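For anyone who hasn't run into it: the year 2038 problem comes from storing Unix time (seconds since 1970-01-01 UTC) in a signed 32-bit integer. A minimal Python sketch of the overflow, where `to_int32` and `from_unix` are hypothetical helpers that mimic a 32-bit counter:

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1

def to_int32(n: int) -> int:
    """Wrap an arbitrary int the way a signed 32-bit counter would (two's complement)."""
    n &= 0xFFFFFFFF
    return n - 2**32 if n >= 2**31 else n

def from_unix(seconds: int) -> datetime:
    # Adding a timedelta to the epoch also works for negative values on every platform.
    return EPOCH + timedelta(seconds=seconds)

last_ok = from_unix(INT32_MAX)                # last second a 32-bit time_t can hold
wrapped = from_unix(to_int32(INT32_MAX + 1))  # one second later, after wraparound

print(last_ok)  # 2038-01-19 03:14:07+00:00
print(wrapped)  # 1901-12-13 20:45:52+00:00
```

One second past the last representable value, the counter wraps to the most negative number and the clock jumps back to December 1901.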

What is left to say. The Babylonians made a wise choice.

We need six fingers so we can switch to base-12 math.

Nah. Just start counting on your fingers: 1, 2, 3 on the index, 4, 5, 6 on the middle, 7, 8, 9 on the ring, and the rest on your pinkie. It's based on the segments of each finger, and it's how Mayans used to count.

I mean, you already have 12 phalanges on one hand (3 each, from 4 fingers) and you can use your thumb as an indicator.

And the other hand to count up to 5 dozen, aka 60.
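The phalanx scheme is easy to pin down in code. A small sketch, assuming the thumb walks the 12 segments (index, middle, ring, pinkie, three each) and each completed dozen raises one finger on the other hand; `finger_count` is a hypothetical name, and other conventions for when to raise that finger exist:

```python
from typing import Optional, Tuple

FINGERS = ("index", "middle", "ring", "pinkie")

def finger_count(n: int) -> Tuple[int, Optional[str], Optional[int]]:
    """Return (fingers raised on the other hand, finger the thumb touches, segment 1-3).

    Assumed convention: the thumb counts 1-12 on one hand's phalanges, and each
    completed dozen raises one finger on the other hand, so five fingers cap it at 60.
    """
    if not 0 <= n <= 60:
        raise ValueError("five fingers of dozens only reach 5 * 12 = 60")
    dozens, phalanx = divmod(n, 12)
    if phalanx == 0:
        return dozens, None, None  # thumb at rest: an exact number of dozens
    finger, segment = divmod(phalanx - 1, 3)  # 1-3 -> index, 4-6 -> middle, ...
    return dozens, FINGERS[finger], segment + 1

print(finger_count(5))   # (0, 'middle', 2)
print(finger_count(29))  # (2, 'middle', 2)
print(finger_count(60))  # (5, None, None)
```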

Who over the age of 6 counts on their fingers?

This feels like an April fools joke.

Ridiculous concept. If you can’t do the math, get an app or ask an adult.

Maybe I'm not understanding this right. A quick Google search shows that there are 86,400 seconds in a day. With metric time, an hour is 10,000 seconds. That means a day would be about 8.64 hours, but on this clock it's 10? How does that work?

One metric second != one (conventional) second
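Assuming 10 metric hours per day, 100 metric minutes per hour and 100 metric seconds per minute (the layout the comments here describe), here's a rough Python sketch of converting a standard clock reading; `to_metric` is a hypothetical helper, and it simply truncates fractional metric seconds:

```python
SI_SECONDS_PER_DAY = 24 * 60 * 60        # 86,400
METRIC_SECONDS_PER_DAY = 10 * 100 * 100  # 100,000 (assumed 10 h x 100 min x 100 s)

def to_metric(hours: int, minutes: int, seconds: int) -> tuple:
    """Convert a standard h:m:s reading to metric (h, min, s), truncating the remainder."""
    si = hours * 3600 + minutes * 60 + seconds
    # Integer arithmetic keeps the scaling exact until the final truncation.
    metric = si * METRIC_SECONDS_PER_DAY // SI_SECONDS_PER_DAY
    metric_hours, rest = divmod(metric, 100 * 100)
    metric_minutes, metric_seconds = divmod(rest, 100)
    return metric_hours, metric_minutes, metric_seconds

print(to_metric(12, 0, 0))    # (5, 0, 0), i.e. noon
print(to_metric(18, 0, 0))    # (7, 50, 0)
print(to_metric(23, 59, 59))  # (9, 99, 98)
print(SI_SECONDS_PER_DAY / METRIC_SECONDS_PER_DAY)  # 0.864 SI seconds per metric second
```

That resolves the 8.64-hour puzzle: a metric hour is 10,000 metric seconds, which is 8,640 SI seconds (2.4 standard hours), and ten of them make a full day.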

So it’s not using the SI second? That’s a bit weird

I guess it could make sense. Reading a bit more, it looks like the second was originally defined as a fraction (1/86,400) of a day, so using 1/100,000 wouldn't be that crazy. But beyond fucking up all our software and time-measuring tools, it would also completely change a lot of physics/chemistry formulas (or, to be more precise, the constants in those formulas). Interesting thought experiment, but I feel that changing the definition of the second in particular would affect soooo mucchhh.
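To put a number on the physics point: a constant whose unit contains seconds gets rescaled by a power of the ratio between the two seconds. A quick sketch with Python's `fractions` module, assuming a metric second is exactly 1/100,000 of a nominal 86,400-second day (so 0.864 SI seconds); `in_metric_seconds` is a hypothetical helper:

```python
from fractions import Fraction

# Assumption: 1 metric second = 86,400 / 100,000 SI seconds = 0.864 s.
SI_PER_METRIC_SECOND = Fraction(86_400, 100_000)

def in_metric_seconds(value, seconds_exponent: int) -> Fraction:
    """Re-express a value whose unit contains s**seconds_exponent using metric seconds.

    1 s = 1/0.864 metric s, so the number scales by 0.864 ** (-seconds_exponent).
    """
    return Fraction(value) * SI_PER_METRIC_SECOND ** (-seconds_exponent)

c = in_metric_seconds(299_792_458, -1)  # speed of light, m/s
g = in_metric_seconds("9.80665", -2)    # standard gravity, m/s^2

print(float(c))  # 259020683.712 m per metric second
print(float(g))  # 7.3206249984 m per metric second squared
```

Dimensionless constants would come through unchanged, but anything measured per second would get a new value.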

Now I finally know how it feels to be a real American

For once I hate metric (time)

The advantage of 12 and 60 is that they’re extremely easy to divide into smaller chunks. 12 can be divided into halves, thirds, and fourths easily. 60 can be divided into halves, thirds, fourths, and fifths. So ya, 10 isn’t a great unit for time.
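The divisibility point is easy to check. A throwaway sketch (`divisors` is just an illustrative helper) listing the whole-number divisors of the bases being argued about in this thread:

```python
def divisors(n: int) -> list:
    """All positive integers that divide n with no remainder."""
    return [d for d in range(1, n + 1) if n % d == 0]

for base in (10, 12, 60, 100):
    print(base, divisors(base))

# 10 [1, 2, 5, 10]
# 12 [1, 2, 3, 4, 6, 12]
# 60 [1, 2, 3, 4, 5, 6, 10, 12, 15, 20, 30, 60]
# 100 [1, 2, 4, 5, 10, 20, 25, 50, 100]
```

60 has twelve divisors to 100's nine, and unlike 100 it splits evenly into thirds and sixths.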

I don't understand how it's any easier than using 100 and dividing…

1/2 an hour is 30 min; 1/2 an hour, if metric used 100, is 50 min.

1/4 an hour is 15 min; 1/4 a metric hour is 25 min.

Any lower than that and they both get tricky…

1/8 an hour is 7.5 min; 1/8 a metric hour is 12.5 min.

Metric time would be impossible to implement worldwide, I reckon, but I struggle to understand how it's any less simple than the 60-minute hour and 24-hour day we have now…
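Here's that comparison done exactly, for a 60-minute hour versus a hypothetical 100-minute metric hour. A sketch using Python's `fractions` module, where `split_hour` is an illustrative helper:

```python
from fractions import Fraction

def split_hour(denominator: int, minutes_per_hour: int) -> Fraction:
    """Length of 1/denominator of an hour, in that hour's minutes (exact)."""
    return Fraction(minutes_per_hour, denominator)

for denom in (2, 3, 4, 8):
    std, met = split_hour(denom, 60), split_hour(denom, 100)
    print(f"1/{denom} hour: {std} standard min, {met} metric min")

# 1/2 hour: 30 standard min, 50 metric min
# 1/3 hour: 20 standard min, 100/3 metric min
# 1/4 hour: 15 standard min, 25 metric min
# 1/8 hour: 15/2 standard min, 25/2 metric min
```

Halves and quarters come out whole in both; thirds only work with 60, and eighths are fractional either way.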

And 1/3 of 100 is 33.333… (repeating forever). There are strong arguments for a base 12 number system (https://en.wikipedia.org/wiki/Duodecimal), and some folks have already put together a base 12 metric system for it. 10 is really quite arbitrary if you think about it. I mean, we only use it because humans have 10 fingers, and apart from 1 and itself it's only divisible by 2 and 5.
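The 1/3 problem is specific to base 10: in base 12, one third is simply 0.4. A rough sketch of positional expansion with exact fractions; `expand` is a hypothetical helper, and using A and B for ten and eleven is just one common convention for duodecimal digits:

```python
from fractions import Fraction

DIGITS = "0123456789AB"  # A = ten, B = eleven (one common duodecimal convention)

def expand(frac: Fraction, base: int, max_digits: int = 8) -> str:
    """Expand a fraction in [0, 1) in the given base, appending '…' if it doesn't terminate."""
    digits = []
    while frac and len(digits) < max_digits:
        frac *= base
        digit = frac.numerator // frac.denominator  # integer part is the next digit
        digits.append(DIGITS[digit])
        frac -= digit
    text = "0." + "".join(digits) if digits else "0"
    return text + ("…" if frac else "")

print(expand(Fraction(1, 3), 10))  # 0.33333333…
print(expand(Fraction(1, 3), 12))  # 0.4
print(expand(Fraction(1, 8), 12))  # 0.16
print(expand(Fraction(1, 5), 12))  # 0.24972497…
```

A fraction terminates in a base exactly when every prime factor of its reduced denominator divides the base, which is why 1/5 repeats in base 12 while 1/3 repeats in base 10.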

That said, the best argument for sticking with base 10 metric is that it’s well established. And base 10 time would make things more consistent, even if it has some trade offs.

I could get used to that, it’s actually a great idea. Though probably impossible to implement due to inertia, at least currently.

This is really neat, but of course people on Lemmy are already talking about using it practically as a replacement for standard clocks…

I swear, I’ve run out of facepalm on the topic of metric vs. ________.

Having a second that is not in line with the definition of the second, which is the most metric thing, is an abomination.

It’s close enough that counting Mississippis is still roughly accurate.

(for non-US people, we sometimes estimate seconds by counting 1 Mississippi, 2 Mississippi, 3 Mississippi… just because it’s a long word that takes about the right amount of time to say)

Your departure from US defaultism is celebrated here, dear person.

As someone who has written a ridiculous amount of code that deals with date and time, I support this 100%.

Great! Now you’ll not only need to convert between timezones but also between metric and standard time.
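And the conversion would stack on top of time zones. A minimal sketch, assuming the same 10 h × 100 min × 100 s layout and fixed UTC offsets from the standard library's `datetime.timezone`; `metric_clock` is a hypothetical helper that converts the local wall-clock time and ignores microseconds:

```python
from datetime import datetime, timedelta, timezone

def metric_clock(dt: datetime) -> str:
    """Render an aware datetime's local wall-clock time as metric H:MM:SS (truncated)."""
    if dt.tzinfo is None:
        raise ValueError("naive datetime: no time zone to convert from")
    si = dt.hour * 3600 + dt.minute * 60 + dt.second
    metric = si * 100_000 // 86_400  # assumed 100,000 metric seconds per day
    hours, rest = divmod(metric, 10_000)
    minutes, seconds = divmod(rest, 100)
    return f"{hours}:{minutes:02d}:{seconds:02d}"

instant = datetime(2024, 3, 1, 12, 0, tzinfo=timezone.utc)
for offset in (0, -5, 9):
    local = instant.astimezone(timezone(timedelta(hours=offset)))
    print(local.isoformat(), "->", metric_clock(local))

# 2024-03-01T12:00:00+00:00 -> 5:00:00
# 2024-03-01T07:00:00-05:00 -> 2:91:66
# 2024-03-01T21:00:00+09:00 -> 8:75:00
```

Python's `datetime` also has no leap-second support (`second` must be 0 to 59), so a real implementation would have to decide separately what 23:59:60 becomes.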

Also the respective intervals adjusted by leap days and leap seconds will be different!

Yeah but don’t make the units “metric hours/minutes/seconds” for crying out loud. Make another unit of measurement.

US miles, or Scottish, or Swedish, or what? Reusing a word for a different meaning is never the solution.